In this article, we’ll cover what deepfakes are, how they work, and what dangers we can expect from them.

Let’s dive right into it!

What Are Deepfakes or Deepfake AI?

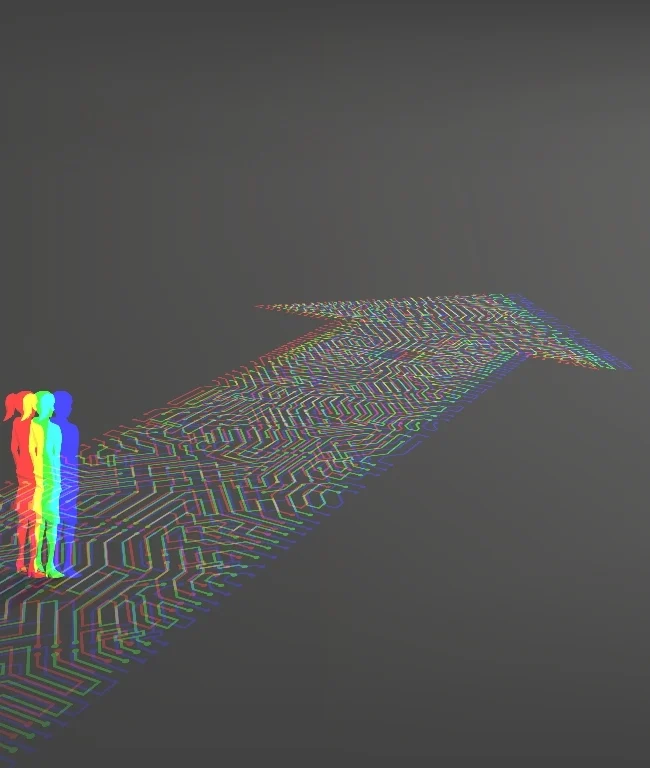

Deepfake AI is a type of artificial intelligence that creates convincing fake images, audio, and video. The term “deepfake” combines “deep learning” with “fake”: deep learning models are used to generate deceptive content.

This technology can seamlessly swap one person for another in existing content, or fabricate entirely new material that shows people doing or saying things they never did.

The primary concern with deepfakes stems from their capacity to disseminate misinformation that appears authentic.

How Are Deepfakes Created?

Deepfakes are created by training neural networks on large amounts of image, video, or audio data until they can reproduce a person’s appearance or voice.

One common technique utilizes a type of neural network called an autoencoder. An autoencoder consists of an encoder and a decoder.

The encoder takes an input, such as an image or video frame, and compresses it into a condensed representation, often described as a point in latent space. This latent representation keeps the essential features of the input in a more compact form. The decoder then reconstructs the original input from it. In the context of deepfakes, the autoencoder is trained on a large dataset of real face images, allowing it to learn the distinctive features of human faces.
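The encoder and decoder idea can be illustrated with a deliberately tiny sketch. It is not how any real deepfake tool is built: it uses a linear autoencoder on made-up 4-number inputs instead of deep convolutional networks on face images, and every name in it (`make_toy_data`, `train_autoencoder`, `encode`, `decode`) is hypothetical. It only shows the mechanics of compressing an input to a shorter latent vector and reconstructing it.

```python
import random


def encode(enc, x):
    """Encoder: compress input x into a shorter latent vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in enc]


def decode(dec, z):
    """Decoder: reconstruct an approximation of the input from latent vector z."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in dec]


def make_toy_data(n=50, seed=1):
    """Hypothetical stand-in for images: 4 numbers that really depend on only 2."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append([a, b, a + b, a - b])
    return data


def train_autoencoder(data, latent_dim=2, epochs=200, lr=0.02, seed=0):
    """Fit a tiny linear autoencoder with stochastic gradient descent.

    Returns (enc, dec, history); history[i] is the mean squared
    reconstruction error measured during epoch i.
    """
    rng = random.Random(seed)
    n = len(data[0])
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(latent_dim)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(latent_dim)] for _ in range(n)]
    history = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            z = encode(enc, x)
            x_hat = decode(dec, z)
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            total += sum(e * e for e in err) / n
            # Backpropagate the squared error (constant factors folded into lr).
            # The latent gradient is computed before the decoder is updated.
            grad_z = [sum(err[i] * dec[i][j] for i in range(n))
                      for j in range(latent_dim)]
            for i in range(n):
                for j in range(latent_dim):
                    dec[i][j] -= lr * err[i] * z[j]
            for j in range(latent_dim):
                for k in range(n):
                    enc[j][k] -= lr * grad_z[j] * x[k]
        history.append(total / len(data))
    return enc, dec, history


data = make_toy_data()
enc, dec, history = train_autoencoder(data)
latent = encode(enc, data[0])          # 2 numbers instead of 4
reconstruction = decode(dec, latent)   # approximation of data[0]
```

The reconstruction error in `history` should shrink as training proceeds, which is the sense in which the network “learns” the structure of its inputs. Real face models follow the same pattern with far larger networks and inputs.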

Another key approach involves the use of Generative Adversarial Networks (GANs). A GAN consists of a generator and a discriminator.

The generator produces synthetic content, such as a fake image or video frame, while the discriminator evaluates whether the content is real or generated. The generator continually refines its output to fool the discriminator, and the discriminator in turn becomes better at distinguishing real from fake.
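The back-and-forth between generator and discriminator can be sketched with a toy example. This is an assumption-laden illustration, not a deepfake model: the “content” is a single number, the “real” data is drawn from a made-up distribution centred on 4, and the class and function names (`TinyGAN`, `real_sample`, `train`) are hypothetical. Real GANs use deep networks on images, and even this toy version is not guaranteed to converge.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def real_sample(rng):
    """Hypothetical 'real' data: numbers clustered around 4."""
    return rng.gauss(4.0, 0.5)


class TinyGAN:
    """1-D toy GAN: generator g(z) = a*z + b, discriminator D(x) = sigmoid(w*x + c)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0   # generator parameters
        self.w, self.c = 0.0, 0.0   # discriminator parameters

    def generate(self):
        """Turn random noise into a fake sample."""
        return self.a * self.rng.gauss(0.0, 1.0) + self.b

    def discriminate(self, x):
        """Estimated probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, real_x, lr=0.05):
        """One gradient step on -[log D(real) + log(1 - D(fake))]."""
        fake_x = self.generate()
        d_real = self.discriminate(real_x)
        d_fake = self.discriminate(fake_x)
        self.w -= lr * (-(1.0 - d_real) * real_x + d_fake * fake_x)
        self.c -= lr * (-(1.0 - d_real) + d_fake)

    def generator_step(self, lr=0.05):
        """One gradient step on -log D(g(z)): move fakes toward what D calls real."""
        z = self.rng.gauss(0.0, 1.0)
        d_fake = self.discriminate(self.a * z + self.b)
        grad_x = -(1.0 - d_fake) * self.w   # gradient w.r.t. the fake sample
        self.a -= lr * grad_x * z
        self.b -= lr * grad_x


def train(gan, steps=2000):
    """Alternate discriminator and generator updates."""
    for _ in range(steps):
        gan.discriminator_step(real_sample(gan.rng))
        gan.generator_step()
    return gan
```

Each `discriminator_step` makes the discriminator a little better at separating real samples from generated ones, and each `generator_step` nudges the generator toward outputs the discriminator rates as real. That alternation is the adversarial loop described above.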

In practice, these two techniques are often combined.

Role of GAN in Deepfake AI

Within this combination, the GAN’s generator produces realistic content by building on the patterns and features the autoencoder has learned, while the discriminator helps refine that content and make it more convincing.

This intricate interplay between autoencoders and GANs allows deepfake algorithms to create highly convincing fake media, replacing faces in videos or generating entirely new content that can be challenging to differentiate from reality. The continuous refinement of these algorithms contributes to the growing sophistication of deepfake technology.

How Do Deepfakes Work?

As touched upon earlier, deepfakes replicate facial features and expressions in a way that is hard to detect with the naked eye. Under the hood, Generative Adversarial Networks (GANs) generate this data through deep learning, pairing a generator with a discriminator.

Presently, there are applications like DeepFaceLab that learn automatically from the footage they are given, capturing facial expressions in enough detail to reproduce them on another face.

In essence, such a tool functions as a video editor that blends the generated face into the original video, replacing faces as desired.

Although achieving higher accuracy will take more time, the technology is already gaining substantial traction in the film and entertainment industries. Moreover, marketers are exploring its potential for creating diverse promotional content without needing to hire models or actors.

Additionally, there are smaller-scale apps that use deepfake technology for amusing face swaps and humorous videos.

Some Common Use Cases of Deepfake AI

The versatility of deepfakes extends across various domains:

Entertainment: Deepfakes add a touch of amusement in satire and parody, offering viewers a dose of humor even when they recognize the content’s fictional nature.

Caller Services & Customer Support: In caller response services, deepfake voices can provide tailored, personalized responses, especially for call forwarding and receptionist duties. They are also used in customer phone support, where synthetic voices handle routine tasks like checking account balances and addressing complaints.

Fraud and Blackmailing: On the darker side, deepfakes are misused to impersonate individuals in order to illicitly obtain sensitive personal information such as bank account and credit card details. They are also used for blackmail, reputation damage, and cyberbullying.

Misinformation and Political Manipulation: Deepfake videos are also used to manipulate public opinion by showing politicians or other trusted figures in fabricated scenarios. This unsettling trend can distort political discourse and sway public perception through misinformation.

How Deepfake AI Poses Cybersecurity Threats

As we march ahead, technology is advancing rapidly and becoming increasingly accessible worldwide. Consider smartphones as an example – we’ve seen the evolution from keypads to touchscreens, and from passcodes to face unlock.

It’s striking how a seemingly innocuous video, when manipulated or misused, can sow chaos. The internet already hosts videos that blur the line between reality and fiction. Faithfully reproduced details such as facial structure, expressions, and iris features make these videos realistic enough that their authenticity is hard to judge.

This underscores the looming threat of deepfakes to cybersecurity.

To counteract such risks, various governments have enacted laws targeting this technology, aiming to prevent its malicious use.

In 2019, the World Intellectual Property Organization (WIPO) published a draft issues paper that raises the challenges posed by deepfakes and asks how they should be addressed.

How Dangerous Are Deepfakes?

While deepfakes are generally legal, they pose significant risks that span various areas:

1. There’s a potential for blackmail and damage to someone’s reputation, potentially leading to legal challenges for the individuals affected.

2. The dissemination of political disinformation becomes a concern, especially when threat actors from nation-states leverage this technology for malicious purposes.

3. Elections could face interference, manifesting as the creation of fabricated videos featuring candidates, raising concerns about the integrity of the democratic process.

4. Stock markets may be susceptible to manipulation, with counterfeit content created to influence stock prices, posing potential economic risks.

5. Instances of fraud may arise, involving the impersonation of individuals to illicitly access financial accounts and other personally identifiable information (PII).

These risks collectively underscore the need for vigilance and regulatory measures in managing the impact of deepfake technology.

The Real Dangers of Deepfake AI

Deepfakes are currently used across a spectrum of applications, from the amusing to the questionable to the potentially harmful. Many deepfake videos exist purely for entertainment and do no harm.

Take, for instance, the amusing video of Jon Snow apologizing for the final season of Game of Thrones. Deepfakes have also been applied to Hollywood footage: in a widely shared fan-made clip, a youthful Harrison Ford’s face was placed onto Han Solo in Solo: A Star Wars Story.

However, there’s an escalating trend in the malicious use of deepfakes.

Shockingly, a 2019 report by the firm Deeptrace estimated that around 96% of deepfakes circulating online are sexually explicit, with the faces of celebrities or other well-known women superimposed onto the bodies of adult film actors. This poses a significant threat, particularly to women who are targeted without their consent.

Looking ahead, the growing menace of deepfakes extends beyond explicit content. A looming concern is the erosion of trust in visual and audio information.

Distinguishing between authentic and manipulated photos or videos is becoming increasingly challenging. This erosion of trust carries substantial implications; for instance, legal proceedings may encounter difficulties in verifying the authenticity of evidence.

Furthermore, deepfakes pose a potential threat to security systems that rely on facial or voice recognition. As the technology advances, there’s a risk that these systems could be deceived by sophisticated deepfake manipulations.

Not being able to tell whether the person on the other end of a call is genuine or a deepfake emulation of their voice and face adds another layer of concern to the evolving landscape of digital deception.

How to Spot a Deepfake?

Spotting deepfakes has become increasingly challenging due to the advancing sophistication of the technology behind their creation.

Back in 2018, a U.S. research team showed that faces in deepfake videos did not blink the way humans naturally do, offering a promising method for distinguishing real from fake images and videos.

However, as soon as this insight was shared, deepfake creators promptly addressed the issue, refining their technology and making detection even harder. Ironically, efforts to develop tools for identifying deepfakes often unintentionally contribute to the improvement of deepfake technology itself.
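To make the blinking cue concrete, here is a minimal sketch of a blink-rate check. It is not the method the 2018 researchers used. It assumes some other tool has already produced a per-frame “eye openness” score between 0 and 1, and the function names and thresholds (`closed_below`, `min_blinks_per_minute`) are hypothetical values chosen for illustration. As noted above, newer deepfakes may well pass a check like this.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count blinks in a per-frame eye-openness series (1.0 = open, 0.0 = closed)."""
    blinks = 0
    eye_closed = False
    for value in eye_openness:
        if value < closed_below and not eye_closed:
            blinks += 1           # an open-to-closed transition starts a blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False    # eye has reopened
    return blinks


def blinks_per_minute(eye_openness, fps):
    """Average blink rate over the whole clip."""
    duration_minutes = len(eye_openness) / fps / 60.0
    if duration_minutes == 0:
        return 0.0
    return count_blinks(eye_openness) / duration_minutes


def looks_suspicious(eye_openness, fps, min_blinks_per_minute=5.0):
    """Flag clips whose blink rate falls below an assumed minimum."""
    return blinks_per_minute(eye_openness, fps) < min_blinks_per_minute


# One minute of video at 30 fps.
# "natural": the eyes close for 3 frames every 4 seconds.
natural = [0.05 if i % 120 < 3 else 0.9 for i in range(1800)]
# "unblinking": the eyes never close.
unblinking = [0.9] * 1800

print(blinks_per_minute(natural, fps=30))    # 15.0
print(looks_suspicious(natural, fps=30))     # False
print(looks_suspicious(unblinking, fps=30))  # True
```

A single cue like this is easy to defeat once it is known, which is exactly the cat-and-mouse dynamic described above.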

For the average person, recognizing a deepfake without the aid of Artificial Intelligence can be an arduous task. Yet a discerning eye can still catch telltale signs of fakery. Common red flags include ears, teeth, or eyes that do not match the outline of the face, lip movements out of sync with the audio, hair without clearly defined individual strands, and unnaturally smooth skin.

Nevertheless, the relentless progress in technology is making it increasingly challenging to distinguish deepfakes from reality, as they continually evolve to appear more authentic.

In this scenario, the task of spotting deepfakes is gradually shifting towards Artificial Intelligence, with major tech companies investing heavily in technologies that can identify these deceptive creations in photos and videos.

Conclusion

The rise of deepfakes is considered a significant societal threat owing to their capacity to generate deceptive content. Various governmental bodies have enacted legislation to tackle these issues.

As the technology grows more sophisticated, ethical use becomes all the more important.

Prominent leaders have voiced genuine concern about the dangers posed by AI, particularly deepfake technology. The concern stems from its potential for malicious use, such as deceit and manipulation. As AI evolves, it is crucial to ensure that it is used responsibly and securely.

Establishing and enforcing rules is imperative to prevent harm caused by its misuse in our global community.
